Why we chose Haskell for our compilers at Imagine

Manu Gunther, Ale Gadea

Imagine Compilers TeamThe problem

Before joining this project, we worked on building several compilers and implementing domain specific languages (DSLs). In all those projects, the problem to solve was more or less the same: one studies with precision the domain, then distinguishes which are the relevant components of the problem to define the syntax of the DSL, and picks a target language to give meaning/semantics to the syntax constructions.

The idea of the Imagine compiler was from the beginning ambitious: we had to capture the concepts present in any web application, define a good representation of them via some kind of DSL and then translate it to human-readable code for different frameworks. This is no easy task: although most frameworks are very similar in functionality, each one has its own idiosyncrasies, its own way of organizing components, its own representation of different concepts, etc. So, some of the challenges in comparison with our well-known recipe was that:

we don’t only use the target language to give semantics, but also as a kind of scaffolding for the developer to learn and build on top of this. The implications of this are, as we already mentioned, the code must be a good-quality, exemplary, human-readable code.

we don’t have only one target language, but as many as the universe of developers we want to cover.

Why Haskell?

Our first decision was about the programming language for implementing this code-generator. The possibility to define an Abstract Syntax Tree (AST) and easily inspect each abstract construction (i.e. characteristics of a language with native support of DSL implementations), also run different checks inside a strongly typed context were requirements for us. So, the answer to the question “Which programming language is the best for our task?” came up immediately: we definitely wanted functional programming, and given that we had enough experience with Haskell and is a widely used and supported language, we chose it. Clearly, Haskell has a lot more important features that were very useful on the implementation of the compiler, like total control about the effects on functions, all kind of monads, etc. but this post is about the design of the Imagine compiler and not Haskell publicity.

Compiler phases

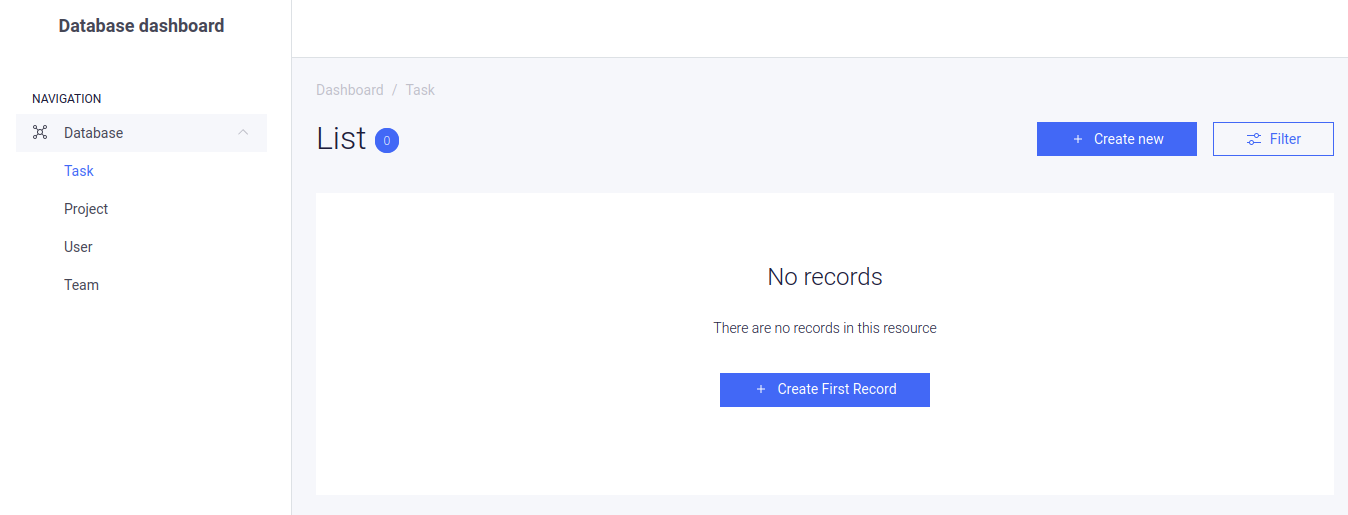

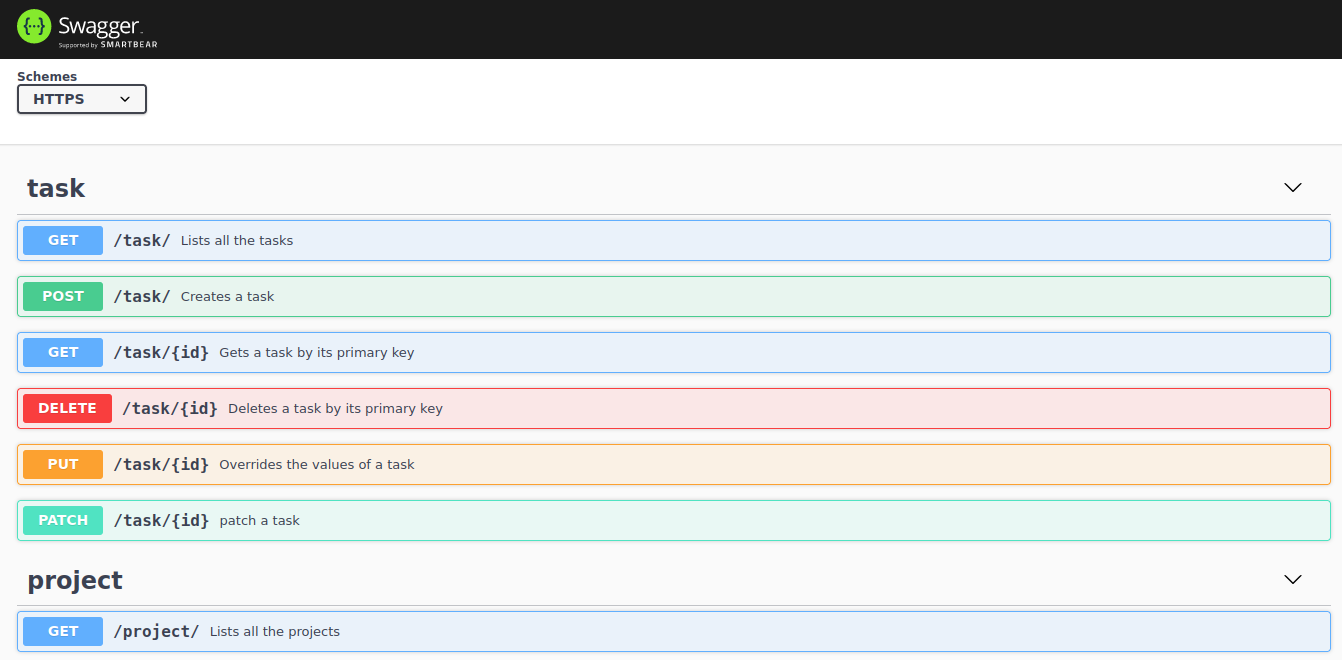

Every compiler is divided in different phases. The Imagine compiler is not an exception. From the beginning we decided to define a deep embedding: the imagine specification language consists of a Haskell AST. This specification language captures the different dynamic components of a web application like the datamodels, api endpoints, etc. From this specification, the imagine-compiler produces a complete project in the target framework (for now Django and Node.js).Having an AST gives the ability to define different concrete representations of the source language which will have the same abstract representation. Currently, we support a text representation and a UI based representation.

The first compiler phase consists of parsing, here we translate the concrete source representation into the abstract one that we call ImagineSpec. Clearly, for each different concrete representation, we need to define a different parser.

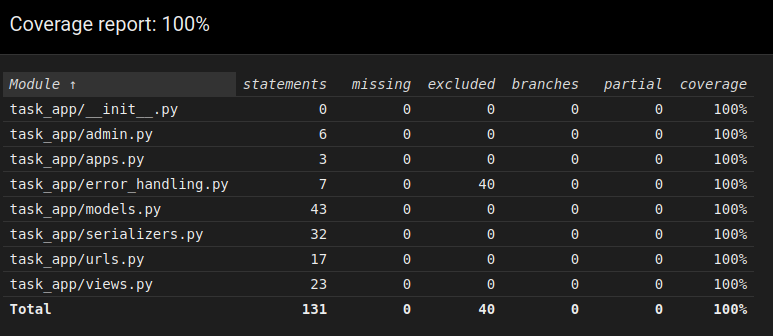

Once we have the abstract representation of the source specification, the second phase consists of doing static checks. We want to ensure that any specification produces working code in the supported frameworks, so we perform different checks over the input before generating code. Here, for instance, we do from simple checks like name clashing to some more complex ones like avoiding circular relations, among others.

The last phase is code generation. Once we have a correct abstract representation of the source specification, we produce a complete project in the specified framework.

Code generation

Our first attempt was to define an abstract representation for the target languages of our compiler. We started researching the structure of Django and Node.js projects, and other backend frameworks, with the support of experts in all of them. We realized that in general, a complete web application has a lot of static code, coming from a couple of settings. Then, a considerable amount of components are built based on the information coming from the specification. We could consider the target code like static code with “holes” which will be filled with the information coming from the source specification. For instance, if we have specified a simple model with some fields, let say

then a corresponding implementation in the ORM of Django and sequelize/Node.js could look like this

Django:

Node.js:

so from a closer look at these implementations, we can identify some “holes” and generalize the structures to give birth to the templates

Django:

Node.js:

Thus, we decided to use a template based approach using mustaches templates. In the code generation phase we write templates corresponding to code-blocks and files of the target language. These templates are rendered filling the holes with the corresponding information from the imagine spec. By serendipity now you don’t need to be a Haskell expert to implement some code generation.

In summary

Haskell allows us to implement a well modularized compiler, with separate phases in which the abstract representation of the input is the most important component. Strong types allow us to detect errors in a very easy way, and ensure correctness on each phase.